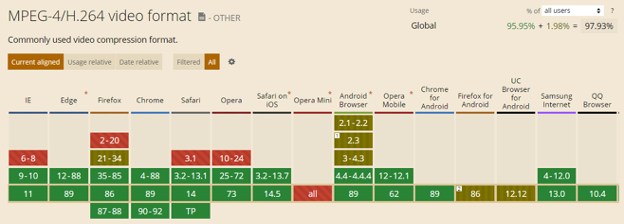

During the last decade, the majority of video streams sent over the Internet were encoded using the ITU-T H.264 / MPEG-4 AVC video codec. Developed in the early 2000s, this codec has become broadly supported on a variety of devices and computing platforms with a remarkable 97.93% reach.

However, by today's standards, this codec is quite old. In recent years, two new codecs have been introduced: HEVC, from the ITU-T and MPEG standards groups, and AV1, from the Alliance for Open Media. Both claim at least a 50% gain in compression efficiency over H.264/AVC.

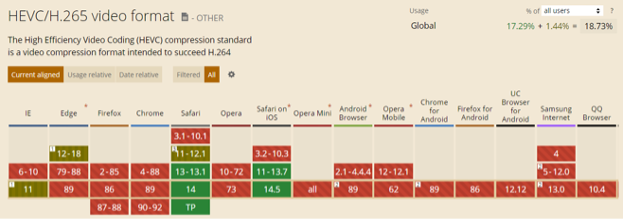

In theory, such gains should lead to a significant reduction in the costs of streaming. In practice, however, these new codecs can only reach particular subsets of the existing devices or web browsers. HEVC, for example, reportedly has a reach of only 18.73%, consisting mostly of Apple devices and devices with hardware HEVC support. AV1 support among web browsers is higher, but notably, AV1 is not supported by Apple devices or by most existing set-top box platforms.

This situation raises the question: given such fragmented support for new codecs across different devices, how do you design a streaming system that reaches all devices with the highest possible efficiency?

In this blog post, we will try to answer this question by introducing the concept of multi-codec streaming and explaining key elements and technologies that we have engineered to support the Brightcove Video Cloud platform.

ADAPTIVE BITRATE STREAMING 101

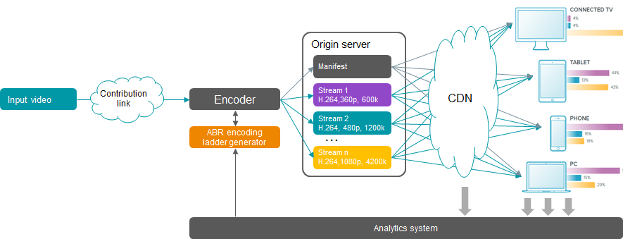

Before we start talking about multi-codec streaming, let’s briefly review the main operating principles of modern Adaptive Bitrate (ABR) streaming systems. A conceptual diagram of such a system is shown in the accompanying figure. For simplicity, we will focus on the VOD delivery case.

When a video asset is prepared for ABR streaming, it is typically transcoded into several renditions (or variant streams). Such renditions typically have different bitrates, resolutions, and other codec- and presentation-level parameters.

Once all renditions are generated, they are placed on the origin server. Along with the set of renditions, the origin server also receives a special manifest file, describing the properties of the encoded streams. Such manifests are typically presented in HLS or MPEG DASH formats. The subsequent delivery of the encoded content to user devices is done over HTTP and by using a Content Delivery Network (CDN), ensuring the reliability and scalability of the delivery system.

To play the video content, user devices use special software, called a streaming client. In the simplest form, a streaming client can be JavaScript run by a web browser. It may also be a custom application or a video player supplied by the operating system (OS). But regardless of the implementation, most streaming clients include logic for adaptive selection of streams/renditions during playback.

For example, if the client notices that the observed network bandwidth is too low to support real-time playback of the current stream, it may decide to switch to a lower bitrate stream. This prevents buffering. Conversely, if there is sufficient bandwidth, the client may choose to switch to a higher bitrate stream, which means higher quality and a better quality of experience. This logic is what makes streaming delivery adaptive. It is also the reason why videos are typically transcoded into multiple (usually 5-10) streams.
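The selection logic described above can be sketched in a few lines of Python. This is a minimal illustration, not the logic of any particular player: the `Rendition` class, the `select_rendition` function, and the `safety_factor` parameter are hypothetical names, and only the lowest and highest ladder entries (216p at 260 Kbps, 1080p at 4200 Kbps) follow the example ladder discussed later in this post. The intermediate values are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    height: int        # vertical resolution in pixels
    bitrate_kbps: int  # average encoded bitrate


def select_rendition(ladder, bandwidth_kbps, safety_factor=0.8):
    """Pick the highest-bitrate rendition that fits the bandwidth budget.

    safety_factor is an assumed margin that leaves headroom for bandwidth
    fluctuations; real clients use more elaborate heuristics.
    """
    budget = bandwidth_kbps * safety_factor
    ordered = sorted(ladder, key=lambda r: r.bitrate_kbps)
    affordable = [r for r in ordered if r.bitrate_kbps <= budget]
    # Fall back to the lowest rendition when nothing fits the budget.
    return affordable[-1] if affordable else ordered[0]


# Illustrative ladder; intermediate entries are hypothetical.
LADDER = [
    Rendition(216, 260),
    Rendition(360, 600),
    Rendition(540, 1200),
    Rendition(720, 2400),
    Rendition(1080, 4200),
]

if __name__ == "__main__":
    for bandwidth in (100, 1000, 10000):
        print(bandwidth, select_rendition(LADDER, bandwidth))
```

A production client would also take buffer occupancy and switching frequency into account; the sketch only captures the bandwidth-driven part of the decision.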

The system depicted in the above diagram has two additional components: The analytics system, which collects the playback statistics from CDNs and streaming clients, and the ABR encoding ladder generator, defining the number and properties of renditions to create. In the Brightcove Video Cloud system, this block corresponds to our Context-Aware Encoding (CAE) module.

ENCODING LADDERS AND QUALITY ACHIEVABLE BY THE STREAMING SYSTEM

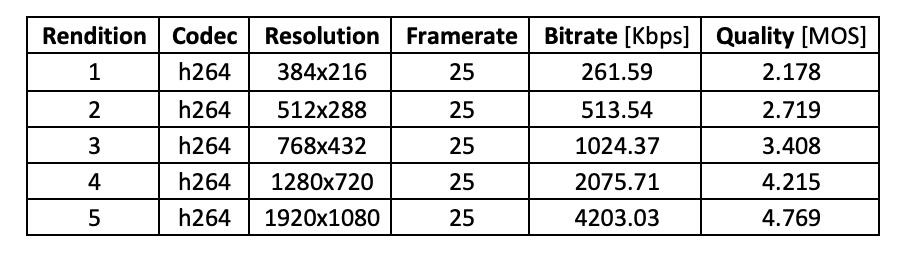

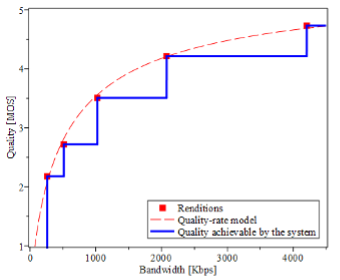

Let us now consider an example of an encoding ladder that may be used for streaming. This particular example was created by Brightcove CAE for action movie video content.

As the table shows, the encoding ladder defines five streams, enabling delivery of video with resolutions from 216p to 1080p at bitrates of about 260 to 4200 Kbps. All streams are produced by the H.264/AVC codec. The last column in this table lists perceived visual quality scores as estimated for playback of these renditions on a PC screen. These values are reported using the Mean Opinion Score (MOS) scale. A MOS of five means excellent quality, while a score of one means that the quality is bad.

We next plot the (bitrate, quality) points corresponding to the renditions, as well as the best quality achievable by the streaming system as the network bandwidth varies. The latter is a step function, shown in blue.

In the above figure, we also include a plot of the so-called quality-rate model function [1-3], describing the best possible quality values that may be achieved by the encoding of the same content with the same encoder. This function is shown by a dashed red curve.

With a proper ladder design, the rendition points become a subset of points from the quality-rate model, and the step function describing the quality achievable by streaming becomes an approximation of this model. The quality delivered by the streaming system is influenced by the number of renditions in the encoding ladder, as well as by the placement of renditions along the bandwidth axis. The closer the resulting step function is to the quality-rate model, the better the quality that can be delivered by the streaming system.
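To make the step function concrete, here is a minimal Python sketch of the quality achievable at a given bandwidth. The function name `achievable_quality` and all (bitrate, MOS) pairs are hypothetical and are not taken from the ladder shown in the figures.

```python
def achievable_quality(ladder, bandwidth_kbps):
    """Best MOS among renditions whose bitrate fits the available bandwidth.

    ladder is a list of (bitrate_kbps, mos) pairs. Returns None when even
    the lowest rendition does not fit, i.e. real-time playback is impossible.
    """
    fitting = [mos for bitrate, mos in ladder if bitrate <= bandwidth_kbps]
    return max(fitting) if fitting else None


# Hypothetical (bitrate in Kbps, MOS) pairs, for illustration only.
EXAMPLE_LADDER = [(260, 2.1), (600, 3.0), (1200, 3.7), (2400, 4.3), (4200, 4.6)]

if __name__ == "__main__":
    for bandwidth in (200, 1000, 3000, 5000):
        print(bandwidth, achievable_quality(EXAMPLE_LADDER, bandwidth))
```

Between two neighboring rendition bitrates the result stays flat, which is exactly the gap between the step function and the quality-rate model that a good ladder design tries to minimize.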

What this all means is that encoding profiles/ladders for ABR streaming must be carefully designed. This is the reason why most modern streaming systems employ special profile generators to perform this step dynamically, by accounting for properties of the content, networks, and other relevant contexts.

Additional details about mathematical methods that can be employed for the construction of quality-rate models and the generation of optimal encoding ladders can be found in references [1-5].

MULTI-CODEC STREAMING: MAIN PRINCIPLES

Now that we’ve explained key concepts, we can turn our attention to multi-codec streaming.

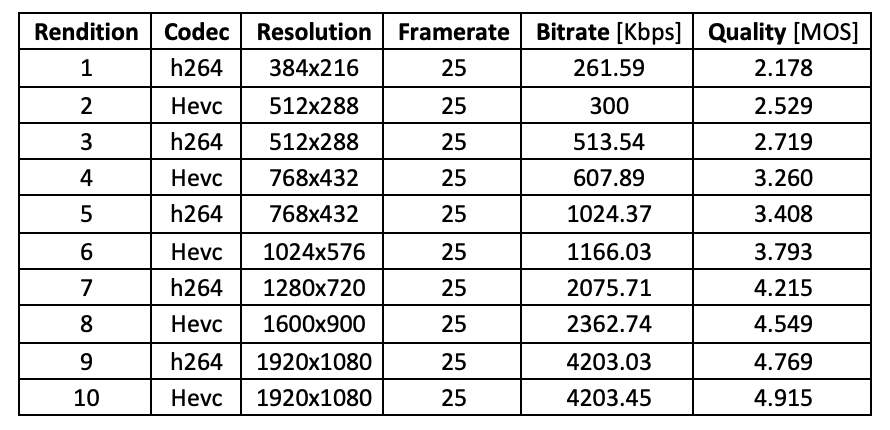

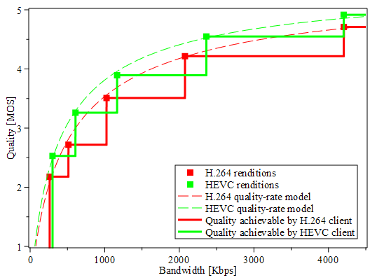

To make this more specific, let’s consider an example of an encoding ladder, generated using two codecs: H.264/AVC and HEVC. Again, Brightcove CAE was used to produce it.

The plots of rendition points, quality-rate models, and quality achievable by streaming clients decoding H.264 and HEVC streams are presented in the figure below.

As the figure shows, the quality-rate model function for HEVC is consistently better than the quality-rate model for H.264/AVC. By the same token, HEVC renditions should also deliver better quality-rate tradeoffs than renditions encoded using the H.264/AVC encoder.

However, since there are typically only a few rendition points, and they may be placed sparsely and in an interleaved pattern, there may be regions of bitrates where an H.264/AVC rendition delivers better quality than the nearest HEVC rendition of smaller or equal bitrate. In the above figure, such regions appear where the step function for H.264/AVC clients rises above the step function for HEVC clients.

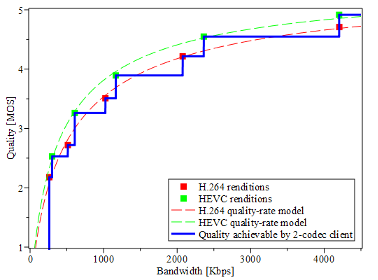

What does this mean? It means that, with a two-codec ladder, decoding only HEVC-encoded streams does not automatically result in the best possible quality! Even better quality may be achieved by clients that selectively and intelligently switch between H.264/AVC and HEVC streams. We illustrate the quality achievable by such “two-codec clients” in the graphic below.

In this example, the two-codec client can make nine adaptation steps instead of just five in HEVC or H.264-only ladders. This enables better utilization of the available network bandwidth and delivery of better quality overall.
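The behavior of such a two-codec client can be sketched as merging both ladders and keeping only the renditions that improve quality as the bitrate grows. The Python below is an illustration under stated assumptions: the function name `combined_steps` is hypothetical, and all bitrates and MOS values are made up, so the resulting step count differs from the one in the figure.

```python
def combined_steps(*ladders):
    """Merge ladders and keep renditions that strictly improve quality.

    Each ladder is a list of (codec, bitrate_kbps, mos) tuples. The result
    lists the adaptation steps available to a client that can switch
    between all codecs, ordered by increasing bitrate.
    """
    merged = sorted(
        (r for ladder in ladders for r in ladder),
        key=lambda r: (r[1], -r[2]),  # by bitrate, best quality first on ties
    )
    steps, best = [], float("-inf")
    for codec, bitrate, mos in merged:
        if mos > best:  # a rendition is useful only if it raises quality
            steps.append((codec, bitrate, mos))
            best = mos
    return steps


# Hypothetical ladders, for illustration only.
HEVC = [("hevc", 200, 2.2), ("hevc", 450, 3.0), ("hevc", 900, 3.7),
        ("hevc", 1800, 4.3), ("hevc", 3400, 4.7)]
AVC = [("avc", 260, 2.1), ("avc", 750, 3.2), ("avc", 1500, 3.9),
       ("avc", 3000, 4.5), ("avc", 4200, 4.6)]

if __name__ == "__main__":
    for step in combined_steps(HEVC, AVC):
        print(step)
```

With these made-up numbers, the merged ladder offers eight useful steps, compared with five for either single-codec ladder. Two H.264/AVC renditions are dropped because an HEVC rendition at a lower bitrate already delivers better quality.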

MULTI-CODEC FUNCTIONALITY SUPPORT IN EXISTING STREAMING CLIENTS

As we just saw, the ability of the streaming client not only to decode but also to switch intelligently and seamlessly between H.264/AVC and HEVC streams is extremely important. This leads to better quality and allows for fewer streams/renditions to be generated, reducing the costs of streaming.

However, not all existing streaming clients have such a capability. The best-known examples of clients that do it well are the native players in recent Apple devices: iPhones, iPads, Mac computers, etc. They can decode and switch between H.264/AVC and HEVC streams seamlessly. Recent versions of the Chrome and Firefox browsers support the so-called changeType method, which technically allows JavaScript-based streaming clients to implement switching between codecs.

Streaming clients on many platforms with hardware decoders, such as smart TVs and set-top boxes, can decode either H.264/AVC or HEVC streams but won’t switch to another codec during a streaming session. And naturally, there are plenty of legacy devices that can only decode H.264/AVC-encoded streams.

This fragmented space of streaming clients and their capabilities must be accounted for when generating encoding ladders, when defining HLS and DASH manifests, and when designing the delivery system for multi-codec streaming. In the next section, we will briefly review how we addressed all these challenges in the Brightcove Video Cloud platform.

MULTI-CODEC SUPPORT IN BRIGHTCOVE VIDEO CLOUD PLATFORM

Brightcove Video Cloud is an end-to-end online video platform that includes all the building blocks of the ABR streaming system that we’ve reviewed in this blog post.

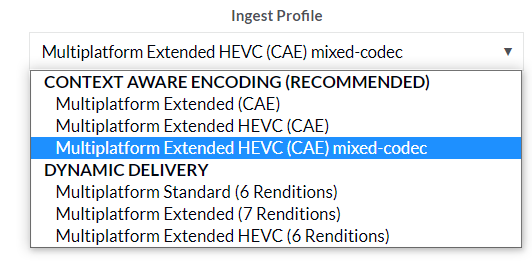

For example, encoding ladder generation in this system is done using Brightcove CAE technology. For the user/operator of the system, it manifests itself in the presence of several pre-configured CAE ingest profiles, enabling H.264, HEVC, and mixed-codec streaming deployments.

When the Multiplatform Extended HEVC (CAE) mixed-codec ingest profile is selected, the result will be a mixed-codec ladder, with both H.264 and HEVC streams present. This profile can produce from three to 12 output streams for both codecs, covering the range of resolutions from 180p to 1080p and with bitrates in the range of 250 Kbps to 4200 Kbps. The CAE profile generator defines everything else automatically, based on characteristics of the content as well as playback statistics observed for the account.

In Video Cloud, the manifests and media segments are produced according to a variety of streaming standards and profiles (e.g. HLS v3, HLS v7, MPEG DASH, Smooth, etc.). These are all generated dynamically, based on the preferences and capabilities of the receiving devices. Furthermore, certain filtering rules (delivery rules) may also be applied. For example, if a playback request comes from a legacy device that can only support H.264/AVC and the HLS v3 streaming format, it will be offered only an HLS v3 manifest with TS-based segments, and that manifest will include only H.264/AVC-encoded streams.
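The kind of filtering described above can be illustrated with a small Python sketch. It is not the Video Cloud implementation: the rule table, the `plan_delivery` function, and the device descriptions are hypothetical, and pairing HLS v7 with fMP4 segments is an assumption made for the example. Only the legacy case (HLS v3, TS segments, H.264 only) comes from the text.

```python
# Hypothetical delivery rules, checked in order; the first match wins.
DELIVERY_RULES = [
    {"format": "hls_v7", "segments": "fmp4", "codecs": {"h264", "hevc"}},
    {"format": "hls_v3", "segments": "ts", "codecs": {"h264"}},
]


def plan_delivery(device, renditions):
    """Choose a manifest format and filter renditions for one device.

    device is a dict with the sets "formats" and "codecs" that it supports;
    renditions is a list of dicts that each carry a "codec" key.
    """
    for rule in DELIVERY_RULES:
        format_ok = rule["format"] in device["formats"]
        codecs_ok = rule["codecs"] <= device["codecs"]  # subset test
        if format_ok and codecs_ok:
            selected = [r for r in renditions if r["codec"] in rule["codecs"]]
            return {
                "format": rule["format"],
                "segments": rule["segments"],
                "renditions": selected,
            }
    raise ValueError("no delivery rule matches this device")


# Illustrative inputs.
RENDITIONS = [
    {"codec": "hevc", "bitrate_kbps": 450},
    {"codec": "h264", "bitrate_kbps": 750},
    {"codec": "hevc", "bitrate_kbps": 1800},
]
LEGACY_DEVICE = {"formats": {"hls_v3"}, "codecs": {"h264"}}
MODERN_DEVICE = {"formats": {"hls_v3", "hls_v7"}, "codecs": {"h264", "hevc"}}

if __name__ == "__main__":
    print(plan_delivery(LEGACY_DEVICE, RENDITIONS))
    print(plan_delivery(MODERN_DEVICE, RENDITIONS))
```

Ordering the rules from most to least capable means that a device is always offered the richest manifest it can handle, while legacy devices fall through to the H.264-only rule.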

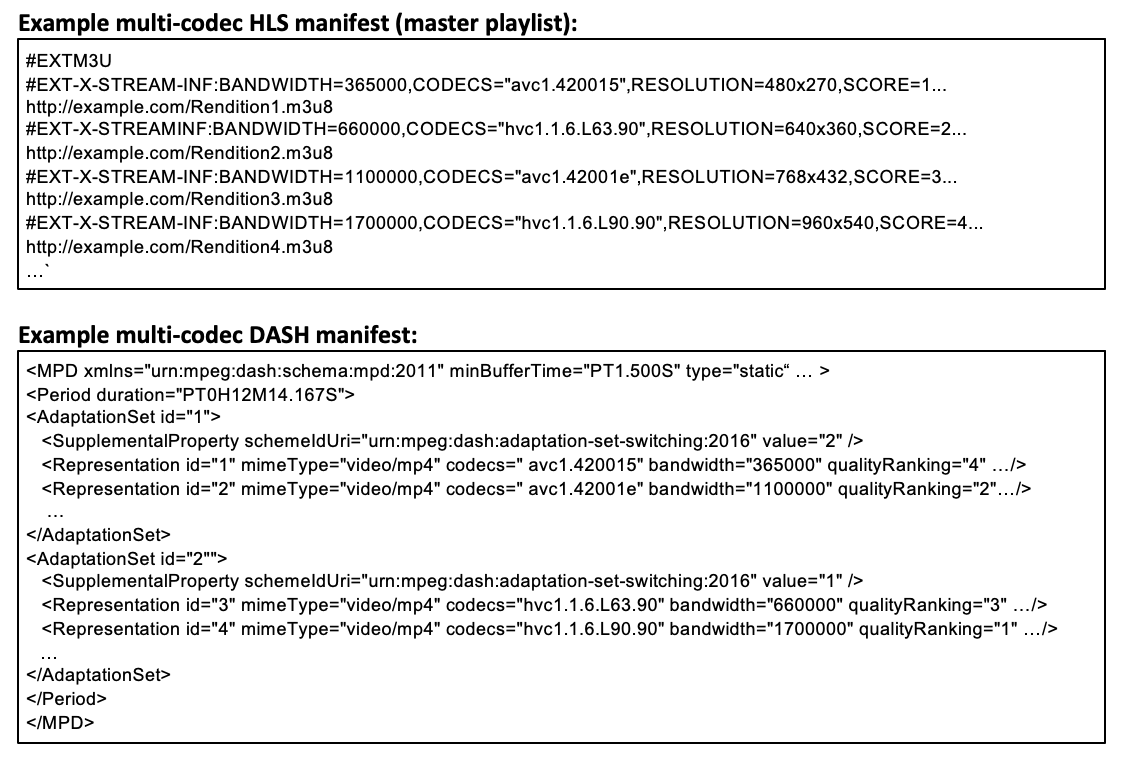

On the other hand, for newer devices capable of decoding both H.264/AVC and HEVC streams, the delivery system may produce a manifest including both H.264/AVC- and HEVC-encoded streams. The declaration of mixed-codec streams in manifests is done according to the HLS and DASH-IF deployment guidelines. We show conceptual examples of such declarations below.

As these examples show, in HLS, mixed-codec renditions can be included in their natural order in the master playlist. In MPEG DASH, however, they must be listed separately, in different adaptation sets, one for each codec. To enable switching between mixed-codec renditions in DASH, a special SupplementalProperty descriptor is included in each adaptation set.
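As a rough sketch of the two declaration styles, the Python below builds an HLS master playlist with interleaved renditions and a DASH Period with one adaptation set per codec. The function names, file names, bitrates, and CODECS strings are illustrative placeholders (real CODECS values depend on the profile and level of each encoded stream). The descriptor uses the adaptation-set-switching scheme identifier, and the output is a fragment, not a complete manifest.

```python
import xml.etree.ElementTree as ET

# (codec label, CODECS string, width, height, bitrate in Kbps); illustrative.
RENDITIONS = [
    ("hevc", "hvc1.1.6.L93.B0", 640, 360, 450),
    ("avc", "avc1.64001f", 640, 360, 750),
    ("hevc", "hvc1.1.6.L93.B0", 1280, 720, 1800),
    ("avc", "avc1.64001f", 1280, 720, 3000),
]


def hls_master_playlist(renditions):
    """List all renditions in one playlist, ordered by bandwidth."""
    lines = ["#EXTM3U", "#EXT-X-VERSION:7"]
    for codec, codecs, width, height, kbps in sorted(renditions, key=lambda r: r[4]):
        lines.append(
            f'#EXT-X-STREAM-INF:BANDWIDTH={kbps * 1000},'
            f'CODECS="{codecs}",RESOLUTION={width}x{height}'
        )
        lines.append(f"{codec}_{height}p.m3u8")  # hypothetical playlist URI
    return "\n".join(lines) + "\n"


def dash_period(renditions):
    """Build one AdaptationSet per codec, cross-linked for switching."""
    period = ET.Element("Period")
    labels = sorted({r[0] for r in renditions})
    set_ids = {label: str(i + 1) for i, label in enumerate(labels)}
    for label, set_id in set_ids.items():
        aset = ET.SubElement(period, "AdaptationSet", id=set_id, contentType="video")
        # The descriptor value lists the other adaptation sets a client may switch to.
        others = ",".join(i for other, i in set_ids.items() if other != label)
        ET.SubElement(
            aset, "SupplementalProperty",
            schemeIdUri="urn:mpeg:dash:adaptation-set-switching:2016",
            value=others,
        )
        for codec, codecs, width, height, kbps in renditions:
            if codec == label:
                ET.SubElement(
                    aset, "Representation", id=f"{codec}_{height}p", codecs=codecs,
                    width=str(width), height=str(height), bandwidth=str(kbps * 1000),
                )
    return period


if __name__ == "__main__":
    print(hls_master_playlist(RENDITIONS))
    print(ET.tostring(dash_period(RENDITIONS), encoding="unicode"))
```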

To assist clients in making the right decisions when switching between streams encoded using different codecs, special relative quality attributes can be used. In HLS, they are called SCORE attributes, with higher values indicating better quality. In MPEG DASH, they are called “Quality Ranking” attributes, with lower values indicating better quality. However, both of these attributes are optional and are supported by only a few existing client devices. To make sure that devices/clients don’t get confused when switching between multi-codec streams, Brightcove Video Cloud offers a manifest filtering option that leaves only renditions with progressively increasing quality values in the final manifests visible to the clients.

The final delivery of multi-codec streams in Video Cloud is handled by a two-level CDN configuration, ensuring high efficiency (low origin bit rate) as well as high scale and reliability of delivery of the streams. More details about the various configurations and optimization techniques employed by Brightcove Video Cloud can be found in our recent paper [3] or in the Brightcove product documentation.
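The opposite directions of the two attributes are easy to mix up, so here is a small Python sketch that derives both from the same quality estimates. The function name `quality_attributes` and the MOS values are hypothetical, and using the MOS value directly as the HLS SCORE is just one possible choice. The sketch also assumes distinct quality values and ranks that are meant to be compared across both adaptation sets.

```python
def quality_attributes(mos_by_rendition):
    """Map rendition names to (hls_score, dash_quality_ranking).

    HLS SCORE: higher value means better quality, so the MOS is used as is.
    DASH quality ranking: lower value means better quality, so renditions
    are ranked from best (1) to worst.
    """
    ordered = sorted(mos_by_rendition.items(), key=lambda kv: kv[1], reverse=True)
    return {name: (mos, rank) for rank, (name, mos) in enumerate(ordered, start=1)}


if __name__ == "__main__":
    # Hypothetical quality estimates, for illustration only.
    estimates = {"hevc_360p": 3.0, "avc_360p": 3.2, "hevc_720p": 4.3}
    for name, (score, ranking) in quality_attributes(estimates).items():
        print(f"{name}: SCORE={score} qualityRanking={ranking}")
```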

CONCLUSIONS

With all of the described features and tools in place, multi-codec streaming can be enabled and deployed with Brightcove Video Cloud in a matter of minutes.

If you are delivering a high volume of streams to Apple devices or other HEVC-capable mobile devices and set-top boxes, enabling the use of HEVC and multi-codec streaming can offer a considerable reduction in CDN traffic/costs, without compromising reach to legacy devices.

The tools that we have engineered ensure that such deployments happen at minimal cost, while maintaining high quality and reliable delivery to all devices.

REFERENCES

[1] Y. Reznik, K. Lillevold, A. Jagannath, J. Greer, and J. Corley, “Optimal design of encoding profiles for ABR streaming,” Proc. Packet Video Workshop, Amsterdam, The Netherlands, June 12, 2018.

[2] Y. Reznik, X. Li, K. Lillevold, A. Jagannath, and J. Greer, “Optimal Multi-Codec Adaptive Bitrate Streaming,” Proc. IEEE Int. Conf. Multimedia and Expo (ICME), Shanghai, China, July 8-12, 2019.

[3] Y. Reznik, X. Li, K. Lillevold, R. Peck, T. Shutt, and P. Howard, “Optimizing Mass-Scale Multiscreen Video Delivery,” SMPTE Motion Imaging Journal, vol. 129, no. 3, pp. 26-38, 2020.

[4] Y. Reznik, “Average Performance of Adaptive Streaming,” Proc. Data Compression Conference (DCC’21), Snowbird, UT, March 2021.

[5] Y. Reznik, “Efficient multi-codec streaming,” talk at HPA Tech Retreat 2021.