The Problem

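New versions of the Brightcove Player are always released with the latest technology to provide the best possible playback experience. To analyze new player changes in real time, Brightcove runs A/B comparison tests that preview the impact of those changes. This blog post gives an overview of how the A/B testing process works and how we improved it so that the player is always delivered as efficiently as possible.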

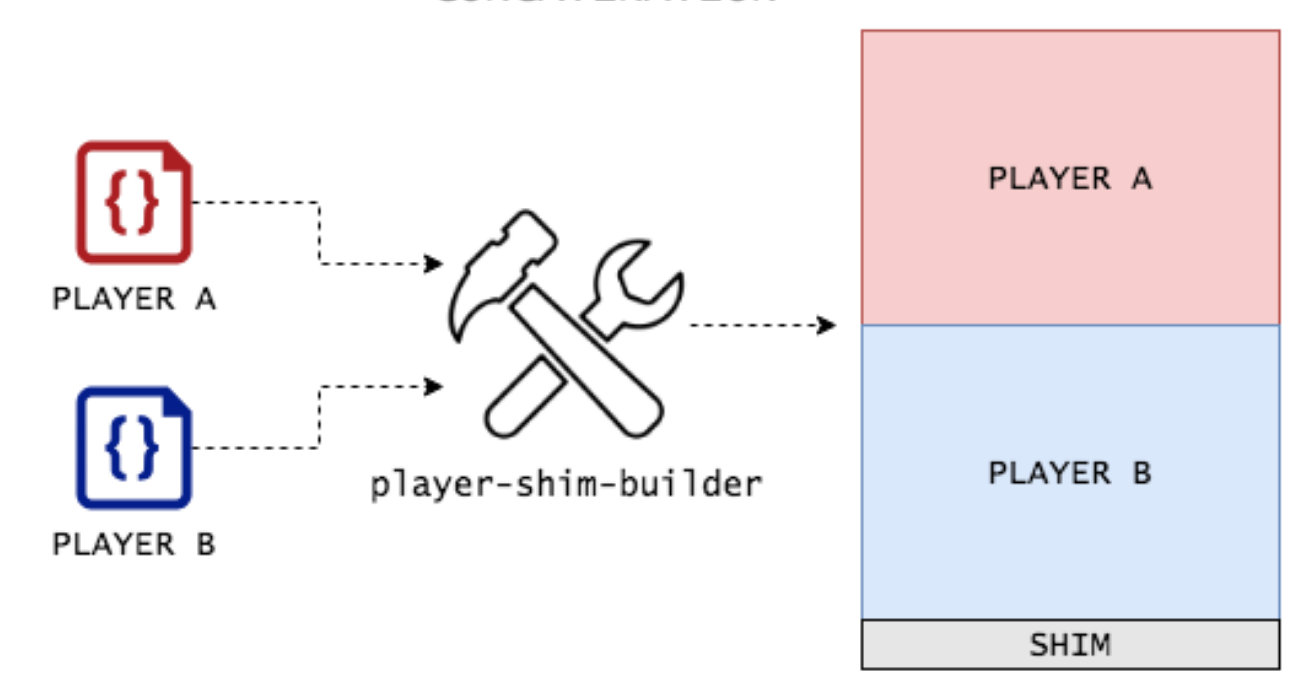

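Historically, A/B comparison testing was achieved by bundling an A version and a B version of every player with automatic updates enabled, ahead of a full release. From a technical standpoint, this was done by concatenating the source code of the A and B players and adding a small "shim" that decides at runtime which player to run. The bundle was built by a project we call "player-shim-builder".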

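After careful analysis of the metrics reported during an A/B test, the new version of the Brightcove Player was either made globally available or rolled back for future consideration. This system achieved its goal, but it had one major problem: bundling two players doubled the payload size for end users. Anyone with a slow internet connection or an older phone could notice that our A/B tests made the player take somewhat longer to load. To make long-running A/B tests of the player practical, something needed to change.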

A New Hope

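Before revealing our trick for addressing this problem, it helps to understand the Lempel-Ziv coding (LZ77) compression algorithm and its role in delivering the Brightcove Player. Virtually all major browsers support it through the gzip Content-Encoding HTTP header. A deep dive into the technical details of the algorithm is outside the scope of this blog post, but in short it compresses data by finding repeated sequences of characters and using special tokens to refer back to those shared bits. The best compression ratio is achieved when data with very similar characters sits close together.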

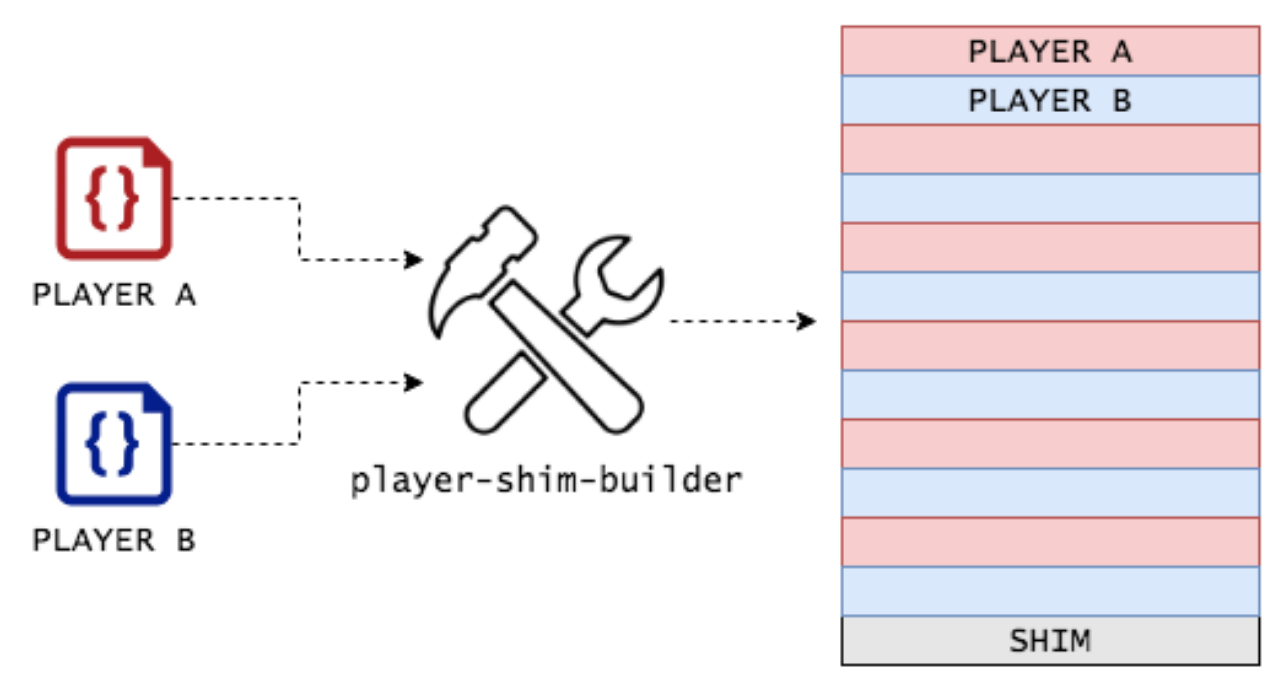
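To make the "close together" point concrete, here is a minimal sketch using Python's standard `zlib` module, which implements the same DEFLATE (LZ77 plus Huffman coding) scheme that gzip uses. The data is synthetic and the names `block`, `filler`, `near`, and `far` are made up for this illustration; none of it is Brightcove code.

```python
import random
import zlib


def pseudo_random_bytes(n: int, seed: int) -> bytes:
    """Deterministic filler that is essentially incompressible on its own."""
    rng = random.Random(seed)
    return bytes(rng.getrandbits(8) for _ in range(n))


block = pseudo_random_bytes(20_000, seed=1)   # data that appears twice
filler = pseudo_random_bytes(40_000, seed=2)  # unrelated data

# Same bytes overall, different ordering.
near = block + block + filler  # second copy starts 20 kB after the first
far = block + filler + block   # second copy starts 60 kB after the first

near_size = len(zlib.compress(near))
far_size = len(zlib.compress(far))

print(f"repeat close by: {near_size} bytes compressed")
print(f"repeat far away: {far_size} bytes compressed")
```

Both inputs contain exactly the same bytes, yet only the first ordering lets the compressor replace the second copy with back-references, because the earlier copy is still inside its look-back window.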

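From the perspective of the Brightcove Player, this matters a great deal. Typically only a small portion of the overall player code changes between versions, so in an A/B test almost all of the code is identical between the A and B versions. Rather than simply concatenating the two players' code, player-shim-builder can split each player codebase into small sections, called "stripes", and interweave them in the bundled package. Each stripe of Player A is often very similar to the corresponding stripe in Player B's source code.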
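The interleaving idea can be sketched in a few lines. This is an illustration under stated assumptions, not the actual player-shim-builder implementation: the `stripe` function, the fixed 16 KiB stripe size, and the synthetic "players" generated by `fake_player` are all hypothetical.

```python
import random
import string
import zlib

STRIPE_SIZE = 16 * 1024  # assumed stripe size for this sketch


def stripe(a: bytes, b: bytes, stripe_size: int = STRIPE_SIZE) -> bytes:
    """Interleave fixed-size stripes of two sources: A0, B0, A1, B1, ..."""
    parts = []
    for start in range(0, max(len(a), len(b)), stripe_size):
        parts.append(a[start:start + stripe_size])
        parts.append(b[start:start + stripe_size])
    return b"".join(parts)


def fake_player(n: int, seed: int) -> bytes:
    """Synthetic stand-in for minified player code (random identifier chars)."""
    rng = random.Random(seed)
    alphabet = (string.ascii_letters + string.digits + "_$").encode()
    return bytes(rng.choices(alphabet, k=n))


player_a = fake_player(96 * 1024, seed=7)

# Player B is Player A with a handful of small edits.
edited = bytearray(player_a)
for offset in (5_000, 40_000, 90_000):
    edited[offset:offset + 64] = b"X" * 64
player_b = bytes(edited)

concat_size = len(zlib.compress(player_a + player_b))
striped_size = len(zlib.compress(stripe(player_a, player_b)))

print(f"concatenated: {concat_size} bytes compressed")
print(f"striped:      {striped_size} bytes compressed")
```

In this synthetic setup the striped bundle should compress to a little over half the size of the concatenated one, because each B stripe sits right next to its nearly identical A counterpart. Real player code will behave differently in detail, since edits that insert or delete code shift the alignment between stripes.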

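"Striping" players for A/B tests has a dramatic effect on the size of the delivered player. Building a test player with the concatenation method produces an index.min.js of 372 kB, while striping produces one of 212 kB. That is 160 kB less, a 43% reduction in the number of bytes delivered.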

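However, the striped player code we deliver cannot be used right away. To play video, the player must be destriped at page load and evaluated into executable JavaScript. The following table breaks down destriping time for several major devices and browsers during our initial test period: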

| Browser | Device | Player loads | Destriping time (90th percentile) | Time to download 160 kB (90th percentile) |
| --- | --- | --- | --- | --- |
| Safari | iOS | 82629698 | 6 ms | 311 ms |
| Chrome Mobile | Android | 72502892 | 16 ms | 189 ms |
| Chrome | Windows 10 | 20244826 | 4 ms | 65 ms |
| Samsung Browser | Android | 9447000 | 16 ms | 256 ms |
| Edge | Windows 10 | 6689872 | 5 ms | 63 ms |
| Safari | OS X | 6762600 | 3 ms | 106 ms |

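Even after accounting for destriping time, we found player load times to be significantly faster than with concatenation across all major browsers and devices. End users of the Brightcove Player who previously noticed a delay during A/B tests no longer do. By optimizing our player delivery strategy, we reduced player initialization time in nearly every scenario.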
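Destriping is simply the inverse of interleaving. The sketch below assumes a bundle format with a fixed stripe size and known source lengths for A and B. Those details, and the function names, are hypothetical; the real player performs this step in JavaScript in the browser.

```python
from typing import Tuple


def stripe(a: bytes, b: bytes, stripe_size: int) -> bytes:
    """Interleave fixed-size stripes of two sources: A0, B0, A1, B1, ..."""
    parts = []
    for start in range(0, max(len(a), len(b)), stripe_size):
        parts.append(a[start:start + stripe_size])
        parts.append(b[start:start + stripe_size])
    return b"".join(parts)


def destripe(bundle: bytes, len_a: int, len_b: int,
             stripe_size: int) -> Tuple[bytes, bytes]:
    """Split an interleaved bundle back into the two original sources."""
    a_parts, b_parts = [], []
    pos = 0
    for start in range(0, max(len_a, len_b), stripe_size):
        # The last stripe of each source may be short or empty.
        a_len = max(0, min(stripe_size, len_a - start))
        b_len = max(0, min(stripe_size, len_b - start))
        a_parts.append(bundle[pos:pos + a_len])
        pos += a_len
        b_parts.append(bundle[pos:pos + b_len])
        pos += b_len
    return b"".join(a_parts), b"".join(b_parts)


source_a = b"function play() { return 'A'; }\n" * 40
source_b = b"function play() { return 'B'; } // changed\n" * 45

bundle = stripe(source_a, source_b, stripe_size=256)
restored_a, restored_b = destripe(bundle, len(source_a), len(source_b),
                                  stripe_size=256)
print(restored_a == source_a, restored_b == source_b)
```

The work is a single linear pass of slicing and joining, which is consistent with the destriping times in the table being small compared with the download time saved.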

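Striping players for A/B comparison tests lets us run longer tests without worrying about slower player delivery. It also lets us expand testing beyond the previous test windows, which were scheduled around developers in the Eastern Time zone. Striping players to strengthen A/B testing is one of many improvements Brightcove has made to push the limits of global player delivery.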

Frequently Asked Questions

Why stripe the players? Why not select a player at runtime?

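Brightcove's player code has long guaranteed synchronous instantiation of the player. Given an embed code, any script tag that runs after the player is instantiated is guaranteed to have a reference to the player object. Selecting and then fetching one of the two players at runtime would require an asynchronous load, which would break that guarantee.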

How did you decide on the stripe size?

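The sliding window for gzip compression used by most browsers is 32 kB. To maximize the repeated characters between stripes without affecting player publish time, we use a 16 kB stripe for the code from each A or B player, so that an A stripe and its B counterpart fit inside the 32 kB window together. To confirm that we had chosen the right length, we tested a variety of stripe sizes.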
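A rough way to see how the stripe size interacts with the 32 kB window is to compress the same pair of synthetic players at several stripe sizes. As before, this is a sketch with made-up data and hypothetical helper names, not the test that Brightcove ran.

```python
import random
import string
import zlib


def stripe(a: bytes, b: bytes, stripe_size: int) -> bytes:
    """Interleave fixed-size stripes of two sources: A0, B0, A1, B1, ..."""
    parts = []
    for start in range(0, max(len(a), len(b)), stripe_size):
        parts.append(a[start:start + stripe_size])
        parts.append(b[start:start + stripe_size])
    return b"".join(parts)


rng = random.Random(11)
alphabet = (string.ascii_letters + string.digits + "_$").encode()
player_a = bytes(rng.choices(alphabet, k=128 * 1024))

# Player B differs from Player A in a few small places.
edited = bytearray(player_a)
for offset in (3_000, 50_000, 120_000):
    edited[offset:offset + 64] = b"X" * 64
player_b = bytes(edited)

sizes = {}
for kib in (4, 8, 16, 32, 64):
    stripe_size = kib * 1024
    sizes[stripe_size] = len(zlib.compress(stripe(player_a, player_b, stripe_size)))
    print(f"{kib:>2} KiB stripes: {sizes[stripe_size]} bytes compressed")
```

With stripes of 32 kB or more, the matching stripe starts at or beyond the edge of the compressor's look-back window, so the duplicated code can no longer be referenced. Smaller stripes keep the counterpart within reach.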

How did you compare destriping time against download speed?

Download speeds for player delivery were unavailable for a variety of reasons. Instead, we used playback bitrate to estimate the speed at which end users download the player code.
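The estimate amounts to dividing the number of bits saved by an observed bitrate. The sketch below assumes that 1 kB is 1000 bytes and that the playback bitrate approximates available download throughput. The 4 Mbps sample value and the function name are hypothetical, not measured data.

```python
def estimated_download_ms(size_bytes: int, bitrate_bps: int) -> float:
    """Estimate the time to download size_bytes at a given bitrate, in ms."""
    if bitrate_bps <= 0:
        raise ValueError("bitrate must be positive")
    return size_bytes * 8 * 1000 / bitrate_bps


SAVED_BYTES = 160_000         # the 160 kB difference between the two bundles
SAMPLE_BITRATE = 4_000_000    # hypothetical 4 Mbps playback bitrate

saved_ms = estimated_download_ms(SAVED_BYTES, SAMPLE_BITRATE)
print(f"estimated time to download 160 kB: {saved_ms:.0f} ms")
```

Comparing an estimate like this against the measured destriping time for the same device class shows whether striping is a net win for that audience.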