Tech Talk: Brightcove Player Delivery Improvements

The Problem

New versions of the Brightcove Player are released frequently, incorporating up-to-date technologies to provide the best playback experience. To get real-time analytics on changes to the player, Brightcove runs A/B comparison tests that preview the impact of those changes. This blog post gives an overview of how our A/B testing process works and how we’ve improved it to ensure the player is always delivered as efficiently as possible.

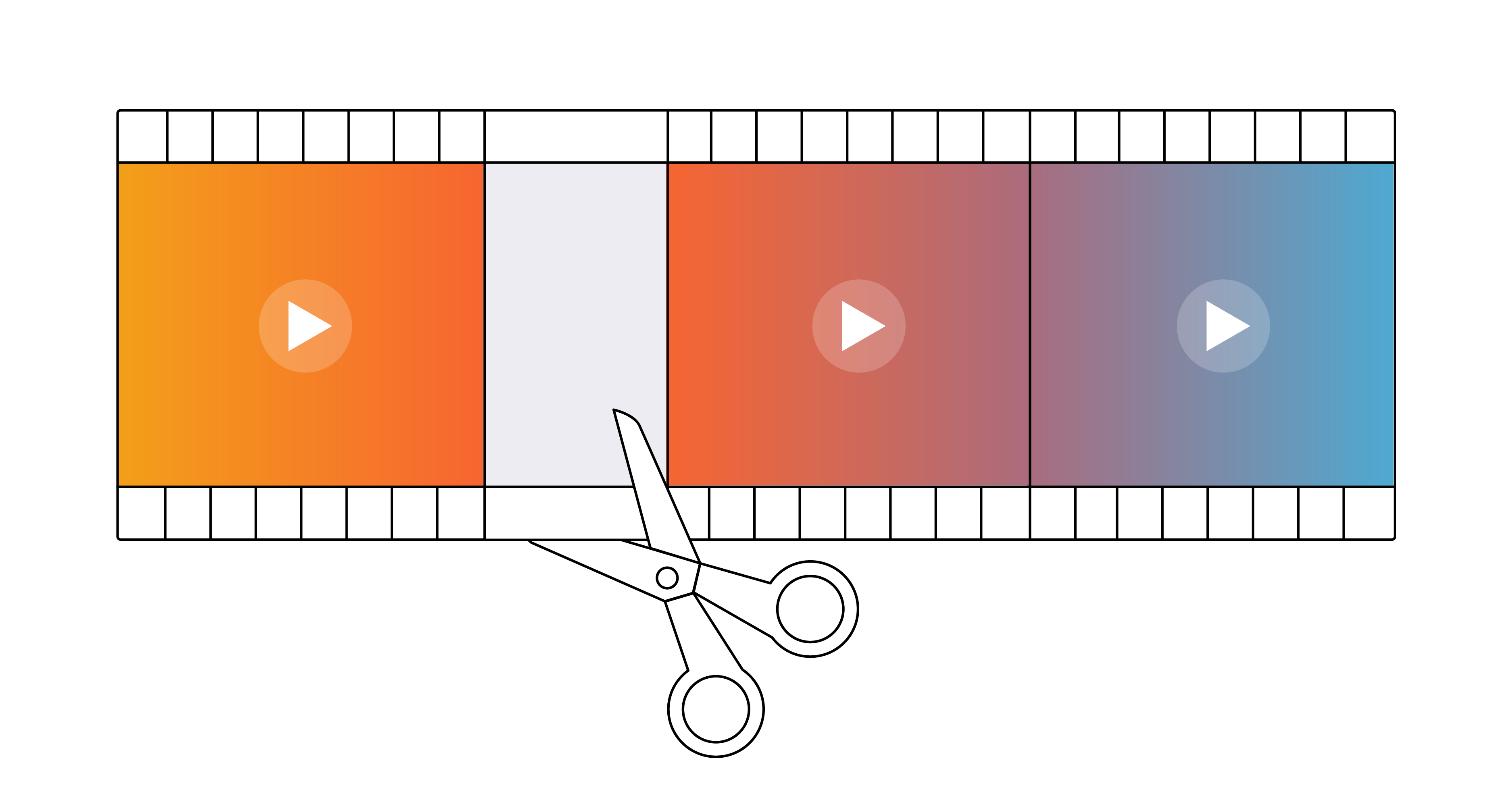

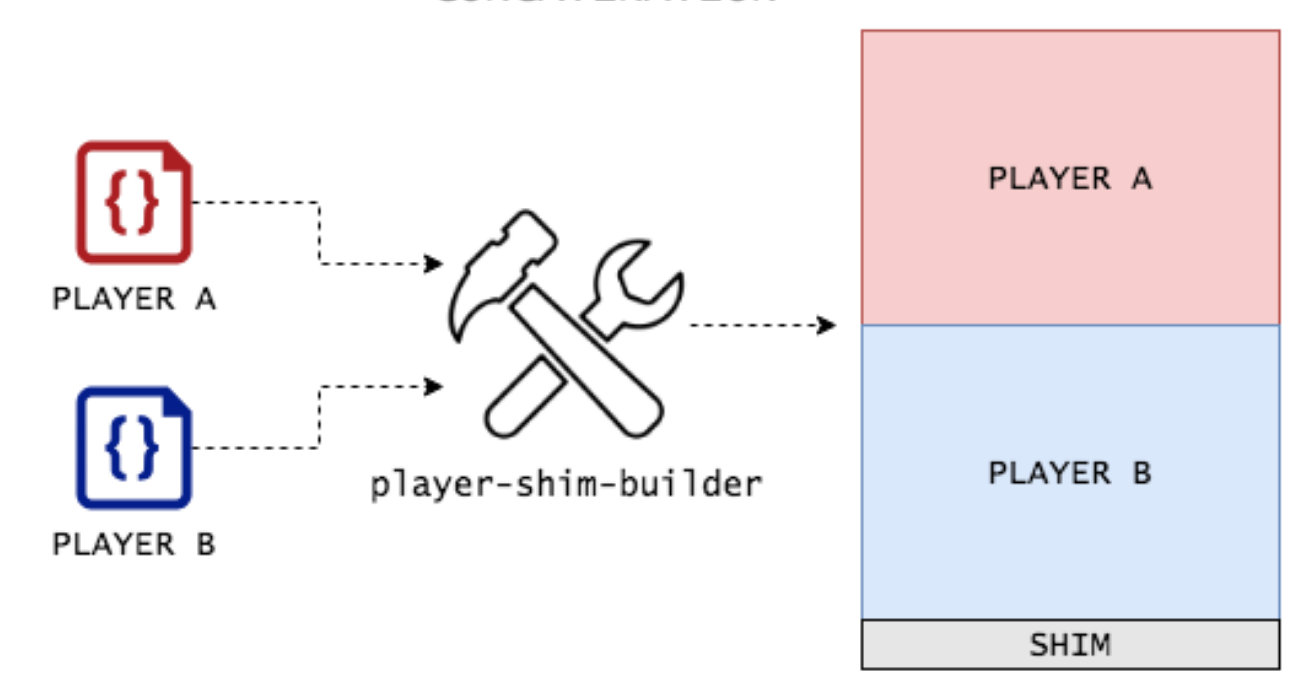

Historically, A/B comparison testing was achieved by bundling together the A and B versions of each player that had automatic updates enabled, prior to a full release. From a technical perspective, this was done by a project we call the “player-shim-builder”, which concatenated the A and B player source code and added a small “shim” to decide which player to execute at runtime.

After careful analysis of metrics reported during the A/B test, the new Brightcove Player version was either made globally available or rolled back for future consideration. While this system achieved its goal, it had a major problem: bundling together two players doubled the size of the payload for end users. Anyone with a slow internet connection or an old mobile phone could notice a small increase in player load times from our A/B testing. To facilitate long-term A/B testing of our player, something had to change.

A New Hope

Before I reveal the secret sauce for how we addressed this issue, it’s important to understand the Lempel-Ziv (LZ77) compression algorithm and its role in delivering the Brightcove Player. Virtually all major browsers support it through gzip, which is signaled by the Content-Encoding HTTP header and whose DEFLATE format pairs LZ77 with Huffman coding. While a deep dive into the technical details is outside the scope of this blog post, the key idea is that the algorithm compresses data by finding repeated sequences of characters and replacing later occurrences with short references back to earlier ones. The best compression ratios are achieved when near-identical data sits in close proximity, because those back-references can only reach a limited distance.

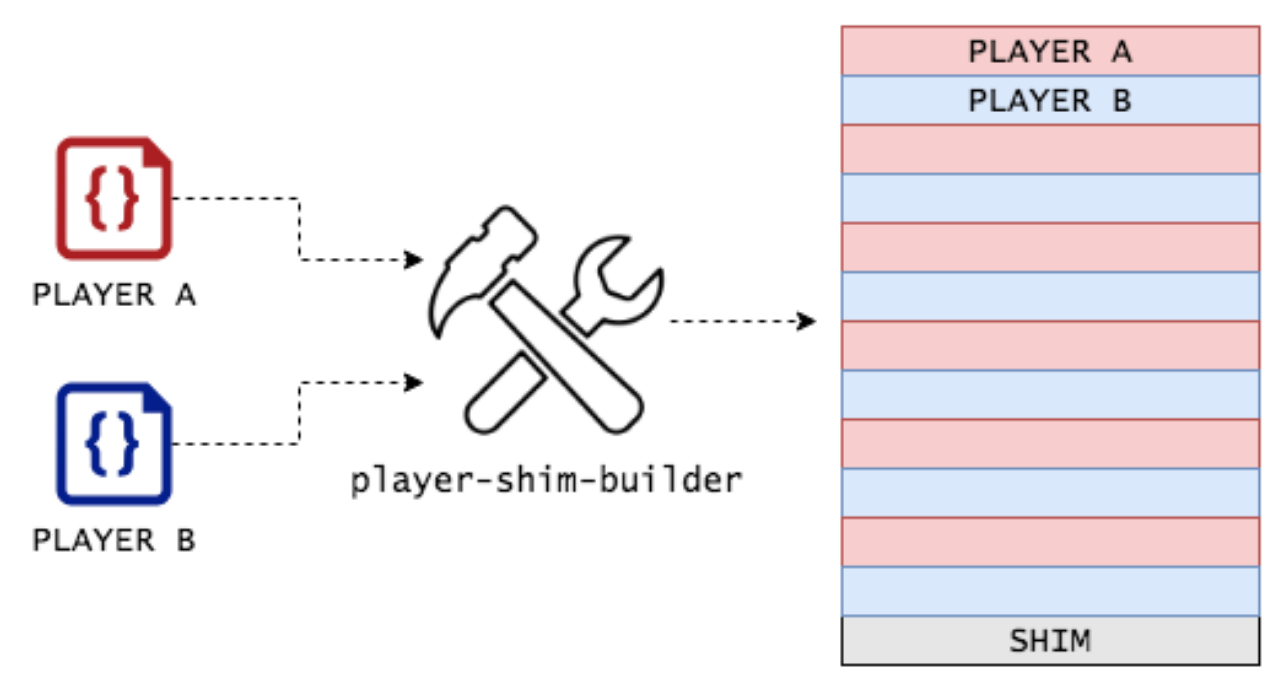
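To make the proximity effect concrete, here is a minimal sketch using Python's standard `zlib` module, which produces the same DEFLATE streams that gzip wraps. It is not part of the Brightcove tooling: the random filler data, the block sizes, and the helper names are assumptions chosen for illustration. It measures how many extra compressed bytes a repeated block costs when the repeat falls inside versus outside DEFLATE's 32 kB window.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes of the zlib (DEFLATE) stream for `data`."""
    return len(zlib.compress(data, 9))


def random_bytes(rng: random.Random, n: int) -> bytes:
    """Pseudo-random filler with essentially no internal repetition."""
    return bytes(rng.getrandbits(8) for _ in range(n))


rng = random.Random(0)
block = random_bytes(rng, 8 * 1024)     # the content that gets repeated
near_gap = random_bytes(rng, 4 * 1024)  # repeat starts 12 kB after the original
far_gap = random_bytes(rng, 64 * 1024)  # repeat starts 72 kB after the original

# Extra compressed bytes needed to append a second copy of `block`.
cost_near = compressed_size(block + near_gap + block) - compressed_size(block + near_gap)
cost_far = compressed_size(block + far_gap + block) - compressed_size(block + far_gap)

print(f"repeat inside the 32 kB window:  +{cost_near} bytes")
print(f"repeat outside the 32 kB window: +{cost_far} bytes")
```

When the second copy is close enough to be referenced, it adds only a small fraction of its 8 kB size; when it is too far away, the compressor has to encode it again almost in full.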

From a Brightcove Player perspective, this is key: usually only a very small percentage of the overall player code changes between versions. In the case of an A/B test, almost all of the code is identical between the A and B versions. Rather than concatenating the code of the two players, the player-shim-builder can divide each player codebase into small sections, or "stripes," and interleave them in the bundled package. Each stripe from Player A is likely to be very similar to the corresponding stripe from Player B’s source.

“Striping” the player for A/B testing has a drastic effect on the size of the player delivered. Using the concatenation method to create a test player results in an index.min.js size of 372 kB, while striping reduces that size to 212 kB, a 43% reduction in bytes delivered.
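The comparison between concatenation and striping can be reproduced in miniature. The sketch below is an illustration under stated assumptions, not the player-shim-builder itself: `interleave` and `split_stripes` are hypothetical helpers, the two "players" are synthetic random byte strings that differ in only 20 bytes, and the 16 kB stripe size is the one mentioned in the FAQ. Because random bytes are otherwise incompressible, the gap here is more extreme than the 43% measured on real player code.

```python
import random
import zlib
from itertools import zip_longest
from typing import List

STRIPE_SIZE = 16 * 1024  # bytes taken from each player per stripe


def split_stripes(source: bytes, size: int = STRIPE_SIZE) -> List[bytes]:
    """Cut `source` into consecutive chunks of at most `size` bytes."""
    return [source[i:i + size] for i in range(0, len(source), size)]


def interleave(a: bytes, b: bytes, size: int = STRIPE_SIZE) -> bytes:
    """Alternate stripes: A0, B0, A1, B1, ... (hypothetical bundle layout)."""
    out = bytearray()
    pairs = zip_longest(split_stripes(a, size), split_stripes(b, size), fillvalue=b"")
    for stripe_a, stripe_b in pairs:
        out += stripe_a
        out += stripe_b
    return bytes(out)


# Synthetic stand-ins for two nearly identical player builds.
rng = random.Random(42)
player_a = bytes(rng.getrandbits(8) for _ in range(128 * 1024))
patched = bytearray(player_a)
for offset in rng.sample(range(len(patched)), 20):
    patched[offset] ^= 0xFF  # flip a handful of bytes to simulate a small change
player_b = bytes(patched)

concatenated = zlib.compress(player_a + player_b, 9)
striped = zlib.compress(interleave(player_a, player_b), 9)

print(f"concatenated: {len(concatenated)} bytes compressed")
print(f"striped:      {len(striped)} bytes compressed")
```

In the concatenated bundle, each part of Player B sits 128 kB after its twin in Player A, far outside the compressor's reach. In the striped bundle, the matching stripe is only 16 kB back, so most of Player B compresses to back-references.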

However, the striped player code we are delivering is not immediately usable. In order to play video, the player must be de-striped when the page loads and evaluated into executable JavaScript. The following table presents a breakdown of de-striping times for a few major devices and browsers during an initial testing period:

| Browser | Device | Player Loads | De-Striping Time (90th Percentile) | Time to Download 160 kB (90th Percentile) |
| --- | --- | --- | --- | --- |
| Safari | iOS | 82,629,698 | 6 ms | 311 ms |
| Chrome Mobile | Android | 72,502,892 | 16 ms | 189 ms |
| Chrome | Windows 10 | 20,244,826 | 4 ms | 65 ms |
| Samsung Browser | Android | 9,447,000 | 16 ms | 256 ms |
| Edge | Windows 10 | 6,689,872 | 5 ms | 63 ms |
| Safari | OSX | 6,762,600 | 3 ms | 106 ms |
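The de-striping step timed above amounts to picking every other stripe and joining the result. The production shim does this in JavaScript in the browser; the Python sketch below only illustrates the logic. The list-of-stripes layout, the `stripe` and `destripe` names, and the `"A"`/`"B"` variant labels are all assumptions made for the example.

```python
from itertools import zip_longest
from typing import List


def stripe(a: str, b: str, size: int) -> List[str]:
    """Build an alternating list [A0, B0, A1, B1, ...] of source chunks.

    The shorter source is padded with empty stripes so that even indexes
    always belong to A and odd indexes always belong to B.
    """
    a_parts = [a[i:i + size] for i in range(0, len(a), size)]
    b_parts = [b[i:i + size] for i in range(0, len(b), size)]
    stripes: List[str] = []
    for part_a, part_b in zip_longest(a_parts, b_parts, fillvalue=""):
        stripes.extend((part_a, part_b))
    return stripes


def destripe(stripes: List[str], variant: str) -> str:
    """Reassemble the source of one variant ("A" or "B") from the bundle."""
    if variant not in ("A", "B"):
        raise ValueError("variant must be 'A' or 'B'")
    start = 0 if variant == "A" else 1
    return "".join(stripes[start::2])


# Round trip with two toy "players" of different lengths.
source_a = "function player(){ return 'A'; }"
source_b = "function player(){ return 'B'; } // slightly longer build"
bundle = stripe(source_a, source_b, 8)
restored_a = destripe(bundle, "A")
restored_b = destripe(bundle, "B")
```

Only the selected variant is reassembled, so the work is a single pass over half of the stripes, which is consistent with the small de-striping times in the table.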

Even after accounting for de-stripe times, we found player load times to be significantly faster than with concatenation across all major browsers and devices. Certain end users of the Brightcove Player no longer have noticeable delays during A/B testing. By optimizing our player delivery strategy, we reduced the initialization time for our player in almost all scenarios.

Striping the player for A/B comparison tests allows us to not only run longer tests without the fear of slowing down player delivery, but also to expand our tests outside of our previous testing windows tailored to developers on Eastern Standard Time. Striping the player to enhance A/B testing is one of the many improvements Brightcove has made to push the limits of global player delivery.

FAQ

Why stripe the player at all? Why not select the player at run-time?

- The Brightcove Player code has a long-standing guarantee of synchronous player instantiation. Given an embed code, any script tags invoked after the player is instantiated are guaranteed to refer to a player object. Selecting the player code from a remote asset at run-time does not provide a similar guarantee.

How did you determine the size of the stripes?

- The sliding window used by gzip compression is 32 kB. To maximize the number of repeated characters between stripes without impacting player publishing time, we take 16 kB of code at a time from each of the A and B players. We tested a variety of stripe sizes to ensure the right length was selected.

How did you compare de-striping time to download speed?

- Player delivery download speeds were unavailable for a variety of reasons. Instead, we used playback bitrates to estimate the rate at which end users download the player code.
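As a rough illustration of that estimate, the conversion from a bitrate to a download time is simple arithmetic. The function name, the 4,000 kbps example bitrate, and the convention that 1 kB is 1,000 bytes are assumptions for this sketch; the post does not specify how the real estimate was computed.

```python
def estimated_download_ms(payload_kb: float, bitrate_kbps: float) -> float:
    """Estimate the time in milliseconds to transfer `payload_kb` kilobytes
    over a connection sustaining `bitrate_kbps` kilobits per second."""
    if bitrate_kbps <= 0:
        raise ValueError("bitrate_kbps must be positive")
    kilobits = payload_kb * 8  # 8 bits per byte
    return kilobits * 1000 / bitrate_kbps


# Hypothetical viewer sustaining a 4,000 kbps playback bitrate,
# downloading the 160 kB saved by striping.
saved_ms = estimated_download_ms(160, 4000)
print(f"estimated time to download 160 kB: {saved_ms:.0f} ms")
```

With these example numbers the estimate works out to 320 ms, which can then be set against the de-striping time for the same device class.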