For those of us in the cloud computing world, the most exciting thing that came out of Google I/O in 2012 wasn’t skydivers wearing Glass, and it wasn’t a new tablet. The big news was that Google is getting into the cloud infrastructure-as-a-service space, currently dominated by Amazon Web Services (AWS). Specifically, Google has launched a new service called Google Compute Engine to compete with Amazon EC2.

This is exciting. The world needs another robust, performant, well-designed cloud virtual machine service. With apologies to Rackspace and others, this has been a single-player space for a long time: EC2 is far and away the leader. Google obviously has the expertise and scale to be a serious competitor, if they stick with it.

How does it look? Early reports are positive. Google Compute Engine (GCE) is well-designed, well-executed, and based on infrastructure Google has been using for years. Performance is good, especially disk I/O, boot times, and consistency, which historically haven’t been EC2’s strong suits. But how well suited is GCE for cloud video transcoding? We have some preliminary results, though more testing is needed. Here are some basic tests of video transcoding and file transfer using Zencoder software on both GCE and EC2.

Raw Transcoding Speed

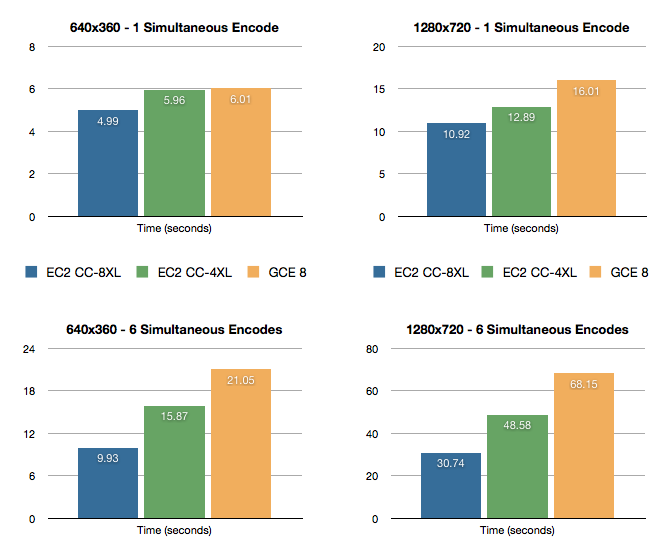

Performance is our top priority, so Zencoder uses the fastest servers we can find. On EC2, we use Cluster Compute instances, fast dual-CPU machines that come in two sizes: 4XL (cc1.4xlarge) and 8XL (cc2.8xlarge). We compared these with the fastest GCE instance type, which is currently a single-CPU 8-core server.

| Server | CPU | Cores | Clock speed |
|---|---|---|---|
| GCE 8-core | Intel Xeon (Sandy Bridge, probably E5-2670) | 8 | 2.60 GHz |
| EC2 cc1.4xlarge | Dual Intel Xeon X5570 | 8 | 2.93 GHz |
| EC2 cc2.8xlarge | Dual Intel Xeon E5-2670 | 16 | 2.60 GHz |

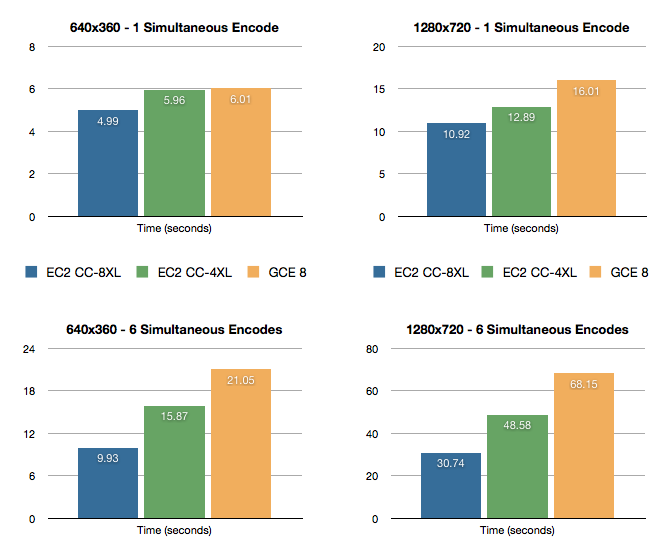

These tests used H.264 source video at 640×360 and 1280×720 resolutions, encoded by Zencoder with the same one-pass output settings for every run (H.264 Baseline profile, AAC audio, Constant Quality rate control, etc.).

| Server | Resolution | Simultaneous encodes | Time (s) | Cost per 1,000 encodes |
|---|---|---|---|---|
| EC2 cc1.4xlarge | 640×360 | 6 | 15.87 | $0.96 |
| EC2 cc2.8xlarge | 640×360 | 6 | 9.93 | $1.10 |
| GCE 8-core | 640×360 | 6 | 21.05 | $1.13 |
| GCE 8-core | 640×360 | 1 | 6.01 | $1.94 |
| EC2 cc1.4xlarge | 640×360 | 1 | 5.96 | $2.15 |
| EC2 cc1.4xlarge | 1280×720 | 6 | 48.58 | $2.92 |
| EC2 cc2.8xlarge | 640×360 | 1 | 4.99 | $3.33 |
| EC2 cc2.8xlarge | 1280×720 | 6 | 30.74 | $3.42 |
| GCE 8-core | 1280×720 | 6 | 68.15 | $3.66 |
| EC2 cc1.4xlarge | 1280×720 | 1 | 12.89 | $4.65 |
| GCE 8-core | 1280×720 | 1 | 16.01 | $5.16 |
| EC2 cc2.8xlarge | 1280×720 | 1 | 10.92 | $7.28 |
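
The cost column is consistent with a simple formula: hourly instance price × wall-clock time ÷ number of simultaneous encodes, scaled to 1,000 encodes. The sketch below checks that reading against the 640×360 rows. The hourly prices ($1.30 for cc1.4xlarge, $2.40 for cc2.8xlarge, $1.16 for the GCE 8-core instance) are an assumption, taken to be the 2012 on-demand list prices; they are not stated in the benchmark, and the function name is hypothetical.

```python
# Sketch: reproduce the "cost per 1,000 encodes" column from the table above.
# ASSUMPTION: these hourly prices are 2012 on-demand list prices supplied for
# illustration; the benchmark itself does not state them.
HOURLY_PRICE_USD = {
    "EC2 cc1.4xlarge": 1.30,
    "EC2 cc2.8xlarge": 2.40,
    "GCE 8-core": 1.16,
}


def cost_per_thousand(server: str, seconds: float, simultaneous: int) -> float:
    """Cost of 1,000 encodes when `simultaneous` jobs finish together in `seconds`."""
    hours_per_encode = seconds / simultaneous / 3600.0
    return round(HOURLY_PRICE_USD[server] * hours_per_encode * 1000, 2)


# (server, simultaneous encodes, time in seconds) for the 640x360 rows.
ROWS_640 = [
    ("EC2 cc1.4xlarge", 6, 15.87),
    ("EC2 cc2.8xlarge", 6, 9.93),
    ("GCE 8-core", 6, 21.05),
    ("GCE 8-core", 1, 6.01),
]

if __name__ == "__main__":
    for server, n, t in ROWS_640:
        print(f"{server:16} x{n}: ${cost_per_thousand(server, t, n):.2f}")
```

With these assumed prices, the printed values match the table ($0.96, $1.10, $1.13, $1.94). The same formula also shows why running several encodes at once is cheaper per encode: the instance-hour is shared across all simultaneous jobs.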

Using default Zencoder settings, both types of EC2 instance are faster than GCE. The economics are closer, and there isn’t a clear winner between 4XL EC2 instances and GCE. So GCE is a viable option for transcoding where cost matters more than raw speed, though AWS customers can use Reserved Instances and Spot Instances to reduce costs further.

Under load with 6 simultaneous transcodes, the 16-core EC2 instances were roughly twice as fast as the 8-core GCE instances (9.93 s vs. 21.05 s at 640×360, and 30.74 s vs. 68.15 s at 1280×720). Given similar clock speeds but half the number of cores, this is what you would expect. If Google adds comparable 16-core machines, GCE could offer similar transcoding speeds.
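
The "half the cores" explanation can be checked with simple arithmetic on the 6-simultaneous-encode rows: dividing encodes completed per second by core count gives per-core throughput. This is a back-of-the-envelope sketch using only the timings and core counts from the tables above; it treats core count as the only difference between the machines and ignores hyper-threading, memory bandwidth, and other factors. The function names are hypothetical.

```python
# Back-of-the-envelope check: is the cc2.8xlarge advantage explained by core count?
# Uses only the 6-simultaneous-encode timings and core counts from the tables above.
CORES = {"GCE 8-core": 8, "EC2 cc2.8xlarge": 16}

# Seconds to finish 6 simultaneous encodes, keyed by (server, resolution).
TIMES = {
    ("GCE 8-core", "640x360"): 21.05,
    ("EC2 cc2.8xlarge", "640x360"): 9.93,
    ("GCE 8-core", "1280x720"): 68.15,
    ("EC2 cc2.8xlarge", "1280x720"): 30.74,
}
SIMULTANEOUS = 6


def speedup(resolution: str) -> float:
    """How many times faster cc2.8xlarge finishes the batch than GCE 8-core."""
    return TIMES[("GCE 8-core", resolution)] / TIMES[("EC2 cc2.8xlarge", resolution)]


def per_core_throughput(server: str, resolution: str) -> float:
    """Encodes completed per second, divided by the number of cores."""
    return SIMULTANEOUS / TIMES[(server, resolution)] / CORES[server]


if __name__ == "__main__":
    for res in ("640x360", "1280x720"):
        print(f"{res}: speedup {speedup(res):.2f}x")
        for server in CORES:
            print(f"  {server:16} {per_core_throughput(server, res):.4f} encodes/s/core")
```

The speedups come out at about 2.1x to 2.2x, while per-core throughput differs by only about 6 to 11 percent between the two machines, which fits the explanation of similar per-core performance with twice as many cores.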

Transfer Speeds

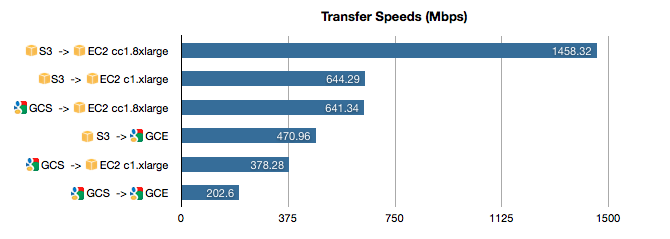

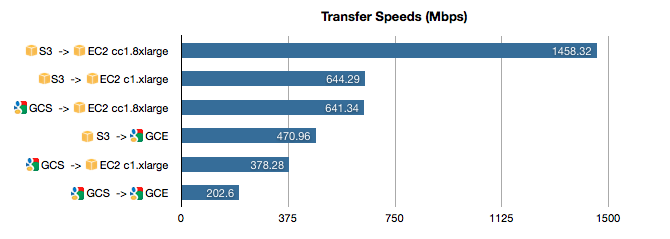

When transcoding video in the cloud, network I/O is almost as important as CPU. This is especially true for customers working with high-bitrate content (broadcasters, studios, and creatives). So how do GCE transfer speeds compare to EC2? To test this, we ran four sets of benchmarks:

- Amazon S3 to Amazon EC2

- Amazon S3 to Google Compute Engine

- Google Cloud Storage to Amazon EC2

- Google Cloud Storage to Google Compute Engine

Each test downloaded the same 1 GB video file, stored on both Google Cloud Storage (GCS) and Amazon S3, over 10 parallel HTTP connections. Zencoder does this by default because splitting a large file across multiple connections can dramatically speed up HTTP transfers.
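
Multi-connection HTTP downloads usually rely on HTTP Range requests: each connection asks for a different byte range, and the client reassembles the pieces in order. The following is a minimal sketch of that technique using only the Python standard library. It is not Zencoder's implementation, and the function names are hypothetical. It assumes the server reports `Content-Length` on a `HEAD` request and honors `Range` headers, which S3 and GCS object downloads support.

```python
import concurrent.futures
import urllib.request


def split_ranges(total_size: int, connections: int) -> list[tuple[int, int]]:
    """Split [0, total_size) into contiguous inclusive byte ranges, one per connection."""
    if total_size <= 0:
        return []
    chunk = -(-total_size // connections)  # ceiling division
    return [
        (start, min(start + chunk, total_size) - 1)
        for start in range(0, total_size, chunk)
    ]


def fetch_range(url: str, start: int, end: int) -> bytes:
    """Download bytes start..end (inclusive) with an HTTP Range request."""
    req = urllib.request.Request(url, headers={"Range": f"bytes={start}-{end}"})
    with urllib.request.urlopen(req) as resp:
        if resp.status != 206:  # 206 Partial Content means the range was honored
            raise RuntimeError(f"server ignored Range header (HTTP {resp.status})")
        return resp.read()


def parallel_download(url: str, connections: int = 10) -> bytes:
    """Fetch `url` over several concurrent connections and reassemble it in order."""
    head = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(head) as resp:
        total_size = int(resp.headers["Content-Length"])
    ranges = split_ranges(total_size, connections)
    with concurrent.futures.ThreadPoolExecutor(max_workers=connections) as pool:
        # map() yields results in input order, so the parts join correctly.
        parts = pool.map(lambda r: fetch_range(url, *r), ranges)
        return b"".join(parts)
```

This version holds the whole file in memory. For a multi-gigabyte source, a more practical design writes each range directly to its offset in a local file.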

| Route | Transfer speed (Mbps) | Server bandwidth |
|---|---|---|
| S3 to GCE | 470.96 | 1 Gbps |
| S3 to EC2 c1.xlarge | 644.29 | 1 Gbps |
| S3 to EC2 cc2.8xlarge | 1458.32 | 10 Gbps |
| GCS to GCE | 202.60 | 1 Gbps |
| GCS to EC2 c1.xlarge | 378.28 | 1 Gbps |
| GCS to EC2 cc2.8xlarge | 641.34 | 10 Gbps |

This is interesting. We expected Amazon-to-Amazon transfer to be fast, which it was. But we also expected Google-to-Google transfer to be fast, which it wasn’t. In fact, GCS appears to be slower than S3, and GCE instances appear to download more slowly than EC2 instances: transfer from S3 to GCE was 2.3x faster than from GCS to GCE (470.96 vs. 202.60 Mbps). So even if you’re using Google for compute, you may be better off using S3 for storage.
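
To put the throughput figures in perspective, the sketch below converts each measured rate into an approximate time to move the 1 GB test file, and reproduces the 2.3x S3-versus-GCS ratio for GCE. It assumes that 1 GB means 10^9 bytes (8,000 megabits) and that each rate held steady for the whole transfer; neither detail is stated in the benchmark. The function name is hypothetical.

```python
# Convert the measured throughputs into approximate transfer times for the 1 GB file.
# ASSUMPTIONS: 1 GB = 10**9 bytes, and each rate held steady for the full transfer.
FILE_MEGABITS = 10**9 * 8 / 10**6  # 8,000 megabits

MBPS = {
    "S3 to GCE": 470.96,
    "S3 to EC2 c1.xlarge": 644.29,
    "S3 to EC2 cc2.8xlarge": 1458.32,
    "GCS to GCE": 202.60,
    "GCS to EC2 c1.xlarge": 378.28,
    "GCS to EC2 cc2.8xlarge": 641.34,
}


def transfer_seconds(route: str) -> float:
    """Approximate seconds to move the test file at the measured rate."""
    return FILE_MEGABITS / MBPS[route]


if __name__ == "__main__":
    for route in MBPS:
        print(f"{route:24} {transfer_seconds(route):6.1f} s")
    ratio = MBPS["S3 to GCE"] / MBPS["GCS to GCE"]
    print(f"S3 vs. GCS into GCE: {ratio:.1f}x faster")
```

Under these assumptions, the file takes about 17 seconds to reach a GCE instance from S3, versus about 39 seconds from GCS.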

More Tests Needed

Consider these results preliminary. Further testing is needed to account for more variables:

- Instance-to-instance differences. Results can vary between individual instances of the same type, especially for file transfer, which depends heavily on network conditions.

- Additional applications. These benchmarks cover only transcoding, which is CPU-bound. Other applications may be limited by disk, memory, or other resources, and these tests say nothing about workloads other than transcoding.

- Scalability. Scalability is extremely important for anyone using the cloud for video transcoding. More tests are needed to see how GCE compares with EC2 at enormous scale: tens of thousands of servers, or more. At what point do users run into capacity issues? Performance problems? Design limitations? Instability?

Cloud Infrastructure Future

Even though EC2 wins in these early tests, we’re excited about Google Compute Engine. To be a serious competitor for high-performance transcoding, Google needs to add larger instances with faster CPUs. But adding new instance types is the easy part, and nothing prevents Google from doing it. What is hard is building a robust, performant, feature-complete, scalable cloud platform, and Google seems to have succeeded. If Google stays committed to this product and its developers over the long run, the cloud virtualization world may have just gained a second legitimate player.